The voice-UI market is on track to reach $30.46 billion this year, up from $25.25 billion in 2024. There are now 8.4 billion voice assistants globally—more than there are people on Earth. Yet we’re still in the early innings of voice as a primary interface for work.

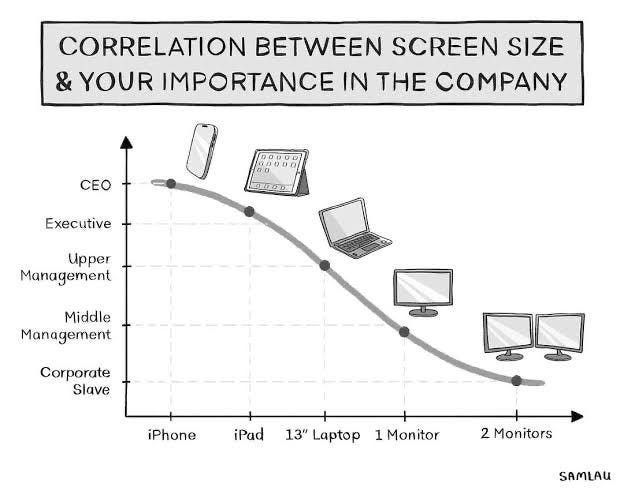

Consider this tension: while 57% of time spent online happens on mobile devices, 68% of knowledge work still occurs on desktop computers. This gap represents a fundamental mismatch between where people are and where their tools assume they’ll be. For startups building voice-first products, the opportunity is wide open.

Voice Agents

Corporate software was architected for a bygone era: 9-to-5 employees anchored to rigid organizational hierarchies, deliberate approval chains, and IT rollouts optimized for stability over speed. The design assumptions baked into most enterprise tools reflect this old reality.

Today’s workforce bears little resemblance to that model. It’s hybrid, distributed, and increasingly global—professionals constantly on the move, juggling cross-functional projects from MacBooks in coffee shops and iPhones across time zones. The new paradigm rewards speed, context-switching ability, and tool flexibility above all else.

Voice eliminates the friction that slows down distributed work. No app-switching lag, no “I’ll handle this when I’m back at my desk” delays, no breaking of cognitive flow. You can capture thoughts, issue commands, and take action in real time whether you’re in an Uber between meetings, working from a coworking space, or standing at your kitchen counter.

The interface disappears, which is the entire point.

The Hardware

The next evolution of voice computing is already in your ears.

AirPods and similar wireless earbuds have quietly assembled the largest live-microphone network in human history. They’re worn for hours at a time and are always within reach, ready to listen the moment they’re activated, turning millions of ears into ambient voice input surfaces. This technology is already deployed at scale.

These devices enable instant capture and orchestration of work: dictate notes, transcribe meetings, issue commands, trigger workflows. Pair AirPods with an Apple Watch and the dependency on the phone begins to dissolve, shifting voice control to your wrist. Against this backdrop, traditional laptops and desktop computers increasingly look like legacy input surfaces: still valuable for their large screens and processing power, but no longer the gravitational center of how work gets started or finished. The hardware infrastructure is embedded in daily life.

The software layer is finally beginning to catch up.

The Software

Microphones are now in nearly everything we own, but natural language processing (NLP) is what turns these passive ‘ears’ into a powerful ‘action layer,’ ready to execute tasks on command.

This is a quantum leap past the clunky, robotic commands of early versions of Siri and Alexa. Today’s language models parse intent, context, and even tone in a fraction of a second. Ask for a meeting with three colleagues sometime next week, and the system checks calendars, navigates conflicts, suggests times, and sends the invites, all from a single spoken sentence.
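Under the hood, the “checks calendars, navigates conflicts, suggests times” step is essentially an interval problem. The sketch below is a minimal illustration, assuming the assistant has already turned the spoken request into a meeting length and a time window and has fetched each attendee’s busy blocks. The function names `merge_busy` and `suggest_slots` are hypothetical and do not correspond to any product’s or calendar provider’s API.

```python
from datetime import datetime, timedelta

# A busy block is a (start, end) pair of datetimes.
Interval = tuple[datetime, datetime]


def merge_busy(busy: list[Interval]) -> list[Interval]:
    """Combine every attendee's busy blocks, merging overlapping or touching ones."""
    merged: list[Interval] = []
    for start, end in sorted(busy):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous block: extend it instead of adding a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def suggest_slots(
    busy: list[Interval],
    window_start: datetime,
    window_end: datetime,
    duration: timedelta,
    limit: int = 3,
) -> list[datetime]:
    """Return up to `limit` start times where a meeting of `duration` fits for everyone."""
    slots: list[datetime] = []
    cursor = window_start
    # A zero-length block at the window's end lets the loop handle the trailing gap too.
    blocks = merge_busy(busy) + [(window_end, window_end)]
    for start, end in blocks:
        gap_end = min(start, window_end)
        # Fill the free gap before this busy block with back-to-back candidate slots.
        while cursor + duration <= gap_end and len(slots) < limit:
            slots.append(cursor)
            cursor += duration
        # Jump past the busy block (no-op if the block ended before the cursor).
        cursor = max(cursor, end)
        if cursor >= window_end or len(slots) >= limit:
            break
    return slots


if __name__ == "__main__":
    day = datetime(2025, 1, 6)
    busy = [
        (day.replace(hour=9), day.replace(hour=10)),                        # attendee A
        (day.replace(hour=9, minute=30), day.replace(hour=10, minute=30)),  # attendee B
        (day.replace(hour=11), day.replace(hour=11, minute=30)),            # attendee A
    ]
    for slot in suggest_slots(busy, day.replace(hour=9), day.replace(hour=12), timedelta(minutes=30)):
        print(slot.strftime("%H:%M"))
```

With the sample calendars above, the only free half-hours between 9:00 and 12:00 start at 10:30 and 11:30, so those are the two suggestions. A production assistant would also need time zones, working-hour preferences, and the call that actually sends invites; those are deliberately left out of this sketch.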

This is creating a whole new class of tools, freeing productivity from the limits of typing speed and screen size. The new measure of efficiency is simply how clearly we can speak our intent.

The Startups

Over 190 Y Combinator-backed startups are currently building pieces of the voice-driven future of productivity. The diversity of approaches is striking—everything from meeting assistants to voice-controlled browsers to ambient note-takers. Here are three companies pushing the boundaries of what’s possible:

April

April is a voice-driven executive assistant app that manages email and calendar tasks hands-free. It’s aimed at busy professionals without full-time support. The app uses voice commands to triage and respond to messages on the go (according to the company, users can clear 100+ emails during a single commute).

Blue

Blue bills itself as the first voice AI assistant that can fully control any iOS or Android app. Using a tiny USB-C “Bud” accessory that physically taps, types, and swipes for the user, it lets commuters and multitaskers speak commands (like “catch me up on Slack” or “pay my power bill from my email”) and have Blue navigate apps and complete tasks hands-free, with no special integrations needed.

Willow

Willow is a macOS AI dictation tool positioned as a “voice interface replacing your keyboard.” It is aimed at heavy typists (engineers, managers, sales teams) and adds context-awareness and live formatting to voice input. It claims up to a 4× speedup over typing and 40% better transcription accuracy than built-in tools.

What Comes Next?

The infrastructure is already in place: billions of devices come standard with high-quality microphones. People have become comfortable talking to machines. The behavioral patterns exist and are strengthening. The language models have reached competence thresholds that make voice-driven workflows genuinely reliable rather than frustrating.

What we’re witnessing now is voice transitioning from input method to operating layer—a substrate through which work gets captured, routed, and completed. When that shift happens, productivity won’t be tied to screens anymore. It’ll be woven into how we move through the day.

The question now is how quickly the software layer will catch up to the hardware reality already in our pockets, on our wrists, and in our ears.

Voice is the way of the future.

✌🏽SR